What? What's that they said? The actual, empirical data doesn't support the agenda? Well, it's no wonder they avoid it, then! But we do not:

There is broad (though not unanimous) agreement, even among skeptic scientists, that the earth has warmed moderately over the past 60 years and that some portion of that warming can be attributed to anthropogenic carbon dioxide emissions. However, despite the hoopla surrounding the recent report on the economic impacts of global warming from the U.N.’s Intergovernmental Panel on Climate Change (IPCC), there is no consensus that temperatures are increasing at an accelerating rate or that we are headed to a climate catastrophe.

In fact, far from increasing at an accelerating rate, the best measures of world average temperatures indicate that there has been no significant warming for the past 15 years, something that the IPCC’s climate models are unable to explain.

Perhaps frustrated by the climate’s unwillingness to follow the global-warming script, the hard-core advocates for costly, energy-killing programs now point to every weather event as the wages of carbon-emitting sins. However, the numbers tell a different story: Upward trends for extreme weather events just aren’t there.

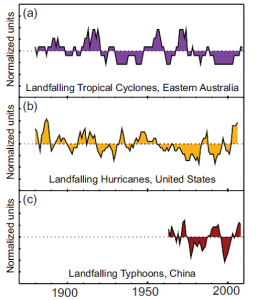

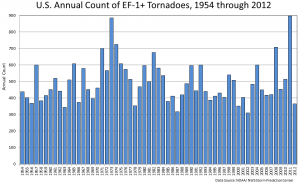

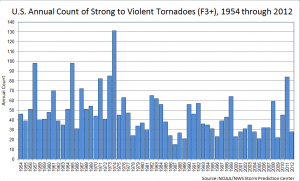

Don't fool yourself into thinking this is simply a political issue, either. Case in point:

Myth #1: Hurricanes are becoming more frequent.

Even the IPCC notes there is no trend over the past 100 years. Here’s what the IPCC says in its latest science report:

Some people will make a big deal about an increase in North Atlantic hurricanes since the 1970s. As the IPCC chart below (Panel b) shows, the 1970s had the lowest frequency of landfalling hurricanes in the past 100 years.

Source: IPCC AR5

Hurricane Sandy seems to be an argument in a class by itself. It should be noted that Sandy became an extratropical cyclone before it made landfall. Here is what the IPCC says about historical trends in extratropical cyclones: “In summary, confidence in large scale changes in the intensity of extreme extratropical cyclones since 1900 is low.”

Also, data from the National Oceanic and Atmospheric Administration (NOAA) covering the years 1851–2004 show that hurricanes made a direct hit on New York State about every 13 years on average. Over the period, there were a total of 12 hurricanes that made landfall along the New York coastline. Five of them were major hurricanes.

Myth #2: Tornadoes are becoming more common.

That is, once we account for the apparent increase in tornado counts that are due to much improved technology for identifying them, tornadoes occur no more frequently now than in the past.

Even more striking is the history of F3 and stronger tornadoes (shown below), which were even less likely to be missed before Doppler radar. That trend is actually down compared to the 1955–1975 period:

Myth #3: Droughts are becoming more frequent and more severe.

In the recent science report, even the IPCC finds little evidence to support the myth regarding droughts, and it backs off from its support in a previous report.
Here is a quote from the AR5:

Myth #4: Floods are becoming more frequent and severe.

The IPCC’s science report states, “In summary, there continues to be a lack of evidence and thus low confidence regarding the sign of trend in the magnitude and/or frequency of floods on a global scale.”

For the U.S., the story is the same. Some places will always be drier and some wetter in comparison to an earlier period. However, for the U.S. overall, there has been no trend. The chart below of the Palmer Hydrological Drought Index (PHDI) shows no trend for increasing droughts (represented by bars with negative values). From 1930 to 1941, the PHDI was consistently negative and set annual records that have not been matched.

Source: National Climatic Data Center

Myth #5: Global warming caused the polar vortex that led to the extreme cold and snow in the eastern half of North America in the winter of 2013–2014.

A letter in Science magazine by prominent non-skeptic scientists had this to say about global warming leading to polar vortexes: “It’s an interesting idea, but alternative observational analyses and simulations with climate models have not confirmed the hypothesis, and we do not view the theoretical arguments underlying it as compelling.”

After looking at evidence of jet-stream variability (the supposed link between a warming Arctic and polar vortexes), authors of a paper in the Quarterly Journal of the Royal Meteorological Society conclude, “When viewed in this longer term context, the variations of recent decades do not appear unusual and recent values of jet latitude and speed are not unprecedented in the historical record.”

Climate blogger Anthony Watts summed it up nicely: “Finally a real scientific consensus: everyone agrees that the recent displaced polar vortex wasn’t caused by global warming.”

I'm not going to include more of the story here, but feel free to go read the whole thing at the link above. The key takeaway here is that the crazy Leftists are floating the idea of literally throwing in jail people who aren't persuaded by the hoax -- yes, I said hoax (see here and here for more) -- that is man-made climate change. It starts as crazy fringe hysteria, but over time these things tend to become accepted if they are repeated loudly enough for long enough. Just ask anyone from the 1950s if they thought prayer in schools would ever be taken away, or if 50+ million unborn babies would be killed in America, or if the words of the Founders would be removed from government buildings simply because they mentioned "God" and were therefore somehow discriminatory. Nah, that person from the 1950s would tell you those suggestions were just the rantings of a crazy lunatic fringe and would never become reality, especially with our treasured freedoms of religion and free speech...

An assistant philosophy professor at Rochester Institute of Technology wants to send people who disagree with him about global warming to jail.

The professor is Lawrence Torcello. Last week, he published a 900-word-plus essay at an academic website called The Conversation.

His main complaint is his belief that certain nefarious, unidentified individuals have organized a “campaign funding misinformation.” Such a campaign, he argues, “ought to be considered criminally negligent.”

Torcello, who has a Ph.D. from the University at Buffalo, explains that there are times when criminal negligence and “science misinformation” must be linked. The threat of climate change, he says, is one of those times.

Maybe we should all pay a little more attention to crazy fringe lunatics like Torcello and nip his rantings in the bud, huh?

I want to close with this article from Power Line from a couple of weeks ago. Read it and understand it, because it completely obliterates the key plank in the climate change hoaxers' platform:

Boom. There you have it.

Climate alarmism is not based on empirical observation; rather, it is entirely predicated on computer models that are manipulated to generate predictions of significant global warming as a result of increased concentrations of CO2. But a model in itself is evidence of nothing. The model obeys the dictates of its creator. In the case of climate models, we know they are wrong: they don’t accurately reproduce the past, which should be the easy part; they fail to account for many features of the Earth’s present climate; and to the extent that they have generated predictions, those predictions have proven to be wrong. There is therefore no reason why anyone should rely on predictions of future climate that are generated by the models.

For a relatively simple and understandable explanation of why the climate models are worthless, check out this article by Dr. Tim Ball at Watts Up With That:

Realities about climate models are much more prosaic. They don’t and can’t work because data, knowledge of atmospheric, oceanographic, and extraterrestrial mechanisms, and computer capacity are all totally inadequate. Computer climate models are a waste of time and money.

Inadequacies are confirmed by the complete failure of all forecasts, predictions, projections, prognostications, or whatever they call them. It is one thing to waste time and money playing with climate models in a laboratory, where they don’t meet minimum scientific standards, it is another to use their results as the basis for public policies where the economic and social ramifications are devastating. Equally disturbing and unconscionable is the silence of scientists involved in the IPCC who know the vast difference between the scientific limitations and uncertainties and the certainties produced in the Summary for Policymakers (SPM).

IPCC scientists knew of the inadequacies from the start.
Kevin Trenberth’s response to a report on inadequacies of weather data by the US National Research Council said,

It’s very clear we do not have a climate observing system…. This may come as a shock to many people who assume that we do know adequately what’s going on with the climate, but we don’t.

This was in response to the February 3, 1999 Report that said,

Deficiencies in the accuracy, quality and continuity of the records place serious limitations on the confidence that can be placed in the research results.

***

Before leaked emails exposed its climate science manipulations, the Climatic Research Unit (CRU) issued a statement that said,

[General circulation models] are complex, three dimensional computer-based models of the atmospheric circulation. Uncertainties in our understanding of climate processes, the natural variability of the climate, and limitations of the GCMs mean that their results are not definite predictions of climate.

Phil Jones, Director of the CRU at the time of the leaked emails, and former director Tom Wigley, both IPCC members, said,

Many of the uncertainties surrounding the causes of climate change will never be resolved because the necessary data are lacking.

Stephen Schneider, a prominent part of the IPCC from the start, said,

Uncertainty about feedback mechanisms is one reason why the ultimate goal of climate modeling – forecasting reliably the future of key variables such as temperature and rainfall patterns – is not realizable.

[Ed.: Steve Schneider is notorious for having been a hysterical advocate of human-caused global cooling before he became a hysterical advocate of human-caused global warming.]

Schneider also set the tone and raised eyebrows when he said in Discover magazine:

Scientists need to get some broader based support, to capture the public’s imagination… that, of course, entails getting loads of media coverage. So we have to offer up scary scenarios, make simplified dramatic statements, and make little mention of any doubts we may have… each of us has to decide what the right balance is between being effective and being honest.

The IPCC achieved his objective with devastating effect, because they chose effective over honest.

Dr. Ball goes on to explain how general circulation models are constructed, and why they are so unreliable:

The surface [of the Earth] is covered with a grid and the atmosphere divided into layers. Computer models vary in the size of the grids and the number of layers. They claim a smaller grid provides better results.
It doesn’t! If there is no data, a finer grid adds nothing. The model needs more real data for each cube and it simply isn’t available. There are no weather stations for at least 70% of the surface and virtually no data above the surface. There are few records of any length anywhere; the models are built on virtually nothing. The grid is so large and crude they can’t include major weather features like thunderstorms, tornadoes, or even small cyclonic storm systems.

One thing I had not realized is that climate models are so complex that they require an unrealistic amount of computer time to perform a single run. As a result, the climateers simply leave out lots of variables:

Caspar Ammann said that GCMs (General Circulation Models) took about 1 day of machine time to cover 25 years. On this basis, it is obviously impossible to model the Pliocene-Pleistocene transition (say the last 2 million years) using a GCM as this would take about 219 years of computer time.

So you can only run the models if you reduce the number of variables. O’Keefe and Kueter explain:

As a result, very few full-scale GCM projections are made. Modelers have developed a variety of short cut techniques to allow them to generate more results. Since the accuracy of full GCM runs is unknown, it is not possible to estimate what impact the use of these short cuts has on the quality of model outputs.

Omission of variables allows short runs, but allows manipulation and moves the model further from reality. Which variables do you include? For the IPCC, only those that create the results they want.

The alarmists’ models cannot withstand scrutiny by qualified scientists who are not in on the scam:

Most don’t understand models or the mathematics on which they are built, a fact exploited by promoters of human-caused climate change. They are also a major part of the IPCC work not yet investigated by people who work outside climate science.
Whenever outsiders investigate, as with statistics and the hockey stick, the gross and inappropriate misuses are exposed.

There is much more, but let’s close with this:

The IPCC chapter on climate models appears to justify use of the models by saying they show an increase in temperature when CO2 is increased. Of course they do; that is how they’re programmed. Almost every individual component of the model has, by their admission, problems ranging from lack of data, lack of understanding of the mechanisms, and important ones are omitted because of inadequate computer capacity or priorities. The only possible conclusion is that the models were designed to prove the political position that human CO2 was a problem.

Scientists involved with producing this result knew the limitations were so severe they precluded the possibility of proving the result. This is clearly set out in their earlier comments and the IPCC Science Report they produced. They remained silent when the [Summary for Policy Makers] claimed, with high certainty, they knew what was going on with the climate. They had to know this was wrong. They may not have known about the political agenda when they were inveigled into participating, but they had to know when the 1995 [Summary for Policy Makers] was published because Benjamin Santer exploited the SPM bias by rewriting Chapter 8 of the 1995 Report in contradiction to what the members of his chapter team had agreed. The gap widened in subsequent [Summaries for Policy Makers], but they remained silent and therefore complicit.

We are witnessing the greatest scandal in the history of science. Someday before long, the discreditable role played by Benjamin Santer, Michael Mann and others will be universally recognized. Until then, governments will continue to funnel billions of dollars to alarmist scientists to reward them for leading the charge for expanded government power.

Bonus fun fact about that "consensus" that is thrown around so frequently in relation to the "fact" that man-made climate change is "settled science":

Two new scientific research efforts have uncovered five new man-made greenhouse gases that may play a role in climate change and ozone depletion.

Wait, wait, wait...I thought the science was settled! But this is an article trying to show support for man-made climate change, and its opening words admit that there are five new greenhouse gases that might factor in here?! How can the science be settled if we don't even know what we don't know?

Ah, that's right. Because it's not science at all. It's politics, pure and simple.
